- HOW TO INSTALL APACHE SPARK IN SCALA 2.11.8 HOW TO

- HOW TO INSTALL APACHE SPARK IN SCALA 2.11.8 DOWNLOAD

SPARK_CLASSPATH is set as follows:

SPARK_CLASSPATH=$:/usr/lib/hadoop/hadoop-common-2.7.2.jar:/usr/lib/hadoop/client/hadoop-hdfs-2.7.2.jar:/usr/lib/spark/jars:/usr/lib/hadoop/hadoop-annotations-2.7.2.jar:/usr/lib/hadoop/hadoop-auth-2.7.2.jar:/usr/lib/hadoop/hadoop-common-2.7.2.jar:/usr/lib/hadoop/hadoop-nfs-2.7.2.jar:/usr/lib/hadoop-hdfs/hadoop-hdfs-2.7.2.jar:/usr/lib/hadoop-yarn/hadoop-yarn-client-2.7.2.jar:/usr/lib/hadoop-yarn/hadoop-yarn-common-2.7.2.jar:/usr/lib/hadoop-yarn/hadoop-yarn-api-2.7.2.jar:/usr/lib/hadoop-yarn/hadoop-yarn-server-common-2.7.2.jar:/usr/lib/hadoop-yarn/hadoop-yarn-server-web-proxy-2.7.2.jar:/usr/lib/hadoop-mapreduce/hadoop-mapreduce-client-app-2.7.2.jar:/usr/lib/hadoop-mapreduce/hadoop-mapreduce-client-common-2.7.2.jar:/usr/lib/hadoop-mapreduce/hadoop-mapreduce-client-core-2.7.2.jar:/usr/lib/hadoop-mapreduce/hadoop-mapreduce-client-jobclient-2.7.2.jar:/usr/lib/hadoop-mapreduce/hadoop-mapreduce-client-shuffle-2.7.2.jar

When the Spark shell starts, its banner reports version 2.3.0, using Scala version 2.11.8 (OpenJDK 64-Bit Server VM, Java 1.8.0_162), followed by the prompt "Type in expressions to have them evaluated."

Spark History Server provides a web UI for completed and running Spark applications. Once Spark jobs are configured to log events, the history server displays both completed and incomplete Spark jobs. If an application makes multiple attempts after failures, the failed attempts are displayed, as well as any ongoing incomplete attempt or the final successful attempt.

MinIO can be used as the storage back-end for the Spark history server using the s3a file system. The $HADOOP_HOME/etc/hadoop/core-site.xml file is already configured with the s3a file system details, so we now need to set up the configuration file in the conf directory so that the history server uses s3a to store the files. By default the conf directory has a template file; make a copy of the template file and rename the copy to the configuration file name.
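The history server setup described above can be sketched in shell. This is a minimal sketch, not the original author's configuration: it assumes the file in question is Spark's standard `conf/spark-defaults.conf`, and the `s3a://` log location is a hypothetical bucket name. `write_history_conf` is a helper name introduced here for illustration; the three property names are standard Spark settings.

```shell
#!/usr/bin/env bash
# Sketch: append event-log and history-server settings to a Spark config file.
# ASSUMPTIONS: the target file name and the s3a:// location are placeholders,
# not values taken from the original text.
write_history_conf() {
  local conf_file="$1"   # e.g. "$SPARK_HOME/conf/spark-defaults.conf" (assumed name)
  local log_dir="$2"     # e.g. "s3a://spark-logs/" (hypothetical bucket)
  cat >> "$conf_file" <<EOF
spark.eventLog.enabled           true
spark.eventLog.dir               ${log_dir}
spark.history.fs.logDirectory    ${log_dir}
EOF
}
```

A call such as `write_history_conf "$SPARK_HOME/conf/spark-defaults.conf" "s3a://spark-logs/"` appends the three lines. Jobs write their event logs to the first location and the history server reads from the second, so both point at the same place here.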

Using SDKMAN, you can easily install specific versions of Scala on any platform with sdk install scala 2.13.8. On macOS you can also use Homebrew and the existing Scala formulae.

Navigate to the directory where you extracted spark-2.3.0-bin-without-hadoop, and set the required environment variables. Then move all the dependency jar files (downloaded in the previous step) into this directory.

The S3A file system is configured through the following settings:

- AWS S3 endpoint to connect to. An up-to-date list is provided in the AWS Documentation: regions and endpoints. Without this property, the standard region (s3.) is assumed.
- Enable S3 path-style access, i.e. disable the default virtual hosting behaviour. Useful for S3A-compliant storage providers, as it removes the need to set up DNS for virtual hosting.
- The implementation class of the S3A Filesystem.
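The S3A settings described in this section (endpoint, path-style access, implementation class) can be written as `core-site.xml` entries. The sketch below assumes they correspond to Hadoop's `fs.s3a.endpoint`, `fs.s3a.path.style.access`, and `fs.s3a.impl` properties. The endpoint URL in the usage note is a placeholder for a local S3-compatible server, and the helper names `s3a_property` and `s3a_properties` are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: print core-site.xml <property> entries for the S3A settings.
# ASSUMPTION: property names are the standard Hadoop S3A ones; the endpoint
# passed in by the caller is a placeholder, not a value from the original text.
s3a_property() {
  # $1 = property name, $2 = value (values are not XML-escaped in this sketch)
  printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
}

s3a_properties() {
  local endpoint="$1"
  s3a_property fs.s3a.endpoint "$endpoint"
  s3a_property fs.s3a.path.style.access true
  s3a_property fs.s3a.impl org.apache.hadoop.fs.s3a.S3AFileSystem
}
```

Running `s3a_properties "http://127.0.0.1:9000"` prints three `<property>` blocks that can be pasted inside the `<configuration>` element of `$HADOOP_HOME/etc/hadoop/core-site.xml`. Credentials are deliberately left out of the sketch.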


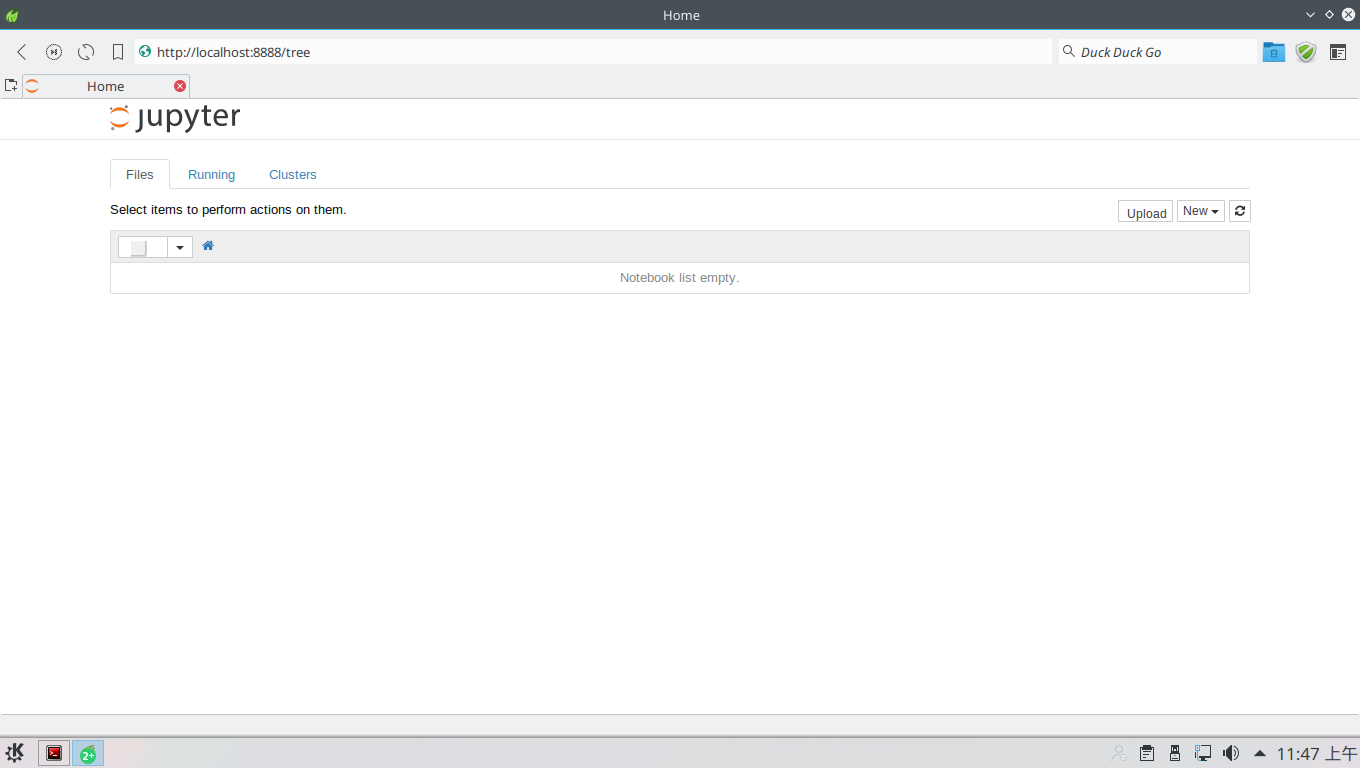

Need help running the binaries? Download and use Scala with IntelliJ, then build a project and test it. To follow along with this guide, first download a packaged release of Spark from the Spark website. Using the Coursier CLI, run: cs install scala:2.11.8 && cs install scalac:2.11.8.


We will first introduce the API through Spark’s interactive shell (in Python or Scala), then show how to write applications in Java, Scala, and Python.
